31 May // 03 June 2012

Festival

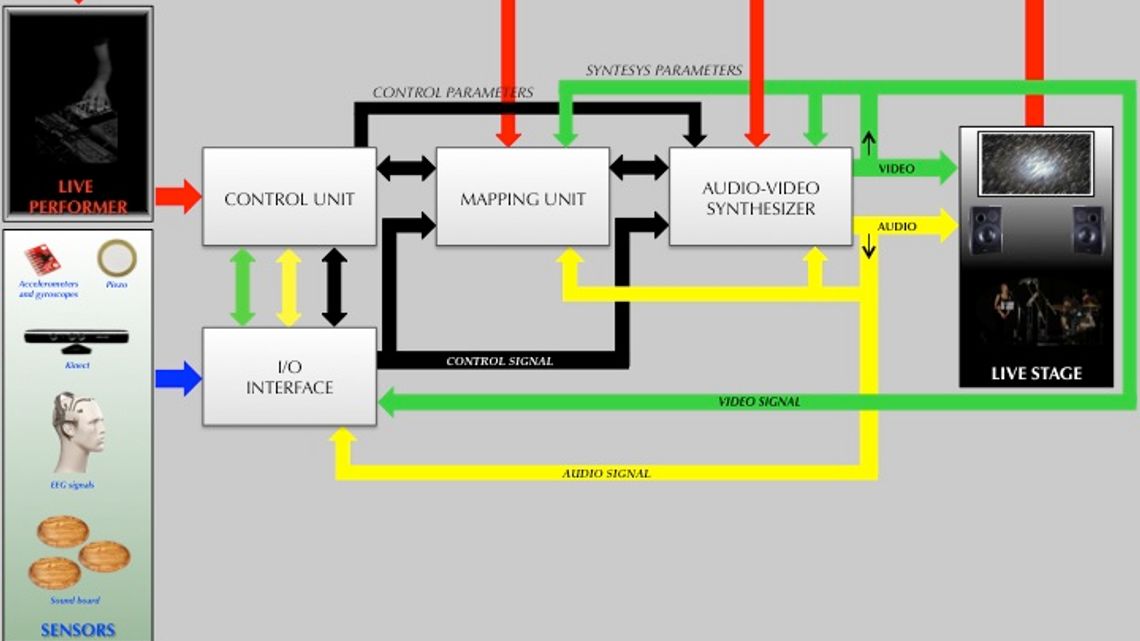

[Text available in English only] We develop a system interface that allows an electronic music composer to plan and conduct the musical expressivity of a performer. By musical expressivity we mean all the execution techniques and modalities that a performer has to follow in order to satisfy common musical aesthetics as well as the wishes of the composer. The proposed interface transforms a few input parameters into many sound synthesis parameters. In particular, we focus on mapping strategies based on neural networks to address the problem of expressivity in electronic music.
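The few-to-many mapping described above can be illustrated with a minimal sketch. It assumes a small one-hidden-layer network with random, untrained weights; the layer sizes, the function names `make_mlp` and `map_controls`, and the normalization of outputs to the range 0 to 1 are all hypothetical choices, not the architecture used in the piece.

```python
import math
import random

def make_mlp(n_in, n_hidden, n_out, seed=0):
    """Build random weights for a one-hidden-layer network (untrained, illustrative)."""
    rng = random.Random(seed)
    w1 = [[rng.uniform(-1, 1) for _ in range(n_in)] for _ in range(n_hidden)]
    b1 = [rng.uniform(-1, 1) for _ in range(n_hidden)]
    w2 = [[rng.uniform(-1, 1) for _ in range(n_hidden)] for _ in range(n_out)]
    b2 = [rng.uniform(-1, 1) for _ in range(n_out)]
    return w1, b1, w2, b2

def map_controls(controls, net):
    """Map a few control values to many synthesis parameters, each in (0, 1)."""
    w1, b1, w2, b2 = net
    # Hidden layer: weighted sum of the controls followed by tanh.
    hidden = [math.tanh(sum(w * x for w, x in zip(row, controls)) + b)
              for row, b in zip(w1, b1)]
    # Output layer: weighted sum of hidden units squashed by a sigmoid.
    return [1.0 / (1.0 + math.exp(-(sum(w * h for w, h in zip(row, hidden)) + b)))
            for row, b in zip(w2, b2)]

# Two gesture-level controls drive eight synthesis parameters.
net = make_mlp(n_in=2, n_hidden=6, n_out=8)
params = map_controls([0.3, 0.8], net)
```

In a real setting the weights would be trained on examples pairing control values with the synthesis settings the composer wants; here they only show the shape of the mapping.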

The synthesis process was realized by means of our sound synthesizer “Textures 3.1”.

“Texture 3.1” is based on the Asynchronous Granular Synthesis algorithm developed by Giorgio Nottoli [Conservatorio di Santa Cecilia, Rome] and is implemented entirely in C++.

It generates a flow of grains in which the way each grain follows the previous one depends on probabilistic parameters such as grain density (attacks/sec), overlap, synchronism and the fade of each grain.
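As a rough illustration of how such parameters could shape a grain stream, the sketch below schedules grain onsets. The interpretation of each parameter is an assumption made for this example: `density` sets the mean number of attacks per second, `overlap` sets the grain length relative to the mean spacing, and `synchronism` blends between evenly spaced and randomly spaced onsets. The function name `schedule_grains` is hypothetical, and the fade envelope is left out for brevity.

```python
import random

def schedule_grains(duration, density, overlap, synchronism, seed=0):
    """Return (onset, length) pairs in seconds for one grain stream.

    density     -- mean grain attacks per second
    overlap     -- mean number of grains sounding at once (sets grain length)
    synchronism -- 1.0 gives evenly spaced onsets, 0.0 fully random spacing
    """
    rng = random.Random(seed)
    period = 1.0 / density
    grain_length = overlap * period
    grains = []
    t = 0.0
    while t < duration:
        grains.append((t, grain_length))
        # Exponentially distributed gap with mean 1/density (Poisson-like stream).
        random_gap = rng.expovariate(density)
        # Blend the regular period with the random gap.
        t += synchronism * period + (1.0 - synchronism) * random_gap
    return grains

regular = schedule_grains(duration=1.0, density=4, overlap=2, synchronism=1.0)
scattered = schedule_grains(duration=1.0, density=40, overlap=2, synchronism=0.0)
```

With `synchronism=1.0` the onsets fall on a regular grid; lowering it toward 0 produces the irregular, asynchronous spacing that gives the texture its granular character.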

The spectrum generated by the synthesis can be harmonic, expanded or contracted according to the value of the frequency exponent parameter. The result is a sound texture that can range from noisy, fragmented sounds, through metallic, untuned sounds such as bells, to harmonic sounds similar to strings or choirs.
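One plausible reading of the frequency exponent is a power law on the partial index, where partial k sits at the fundamental multiplied by k raised to the exponent. This formula is an assumption made for illustration and is not taken from the synthesizer itself; the function name `partial_frequencies` is likewise hypothetical.

```python
def partial_frequencies(fundamental, n_partials, exponent):
    """Frequencies of the first partials under an assumed power-law spacing.

    exponent == 1.0 gives a harmonic series, values above 1.0 stretch
    (expand) the spectrum, and values below 1.0 compress (contract) it.
    """
    return [fundamental * k ** exponent for k in range(1, n_partials + 1)]

harmonic = partial_frequencies(100.0, 4, 1.0)
expanded = partial_frequencies(100.0, 4, 1.2)
contracted = partial_frequencies(100.0, 4, 0.8)
```

Under this assumption, an exponent of 1 yields integer multiples of the fundamental, while any other value moves the upper partials away from the harmonic series and toward bell-like timbres.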

The extensive use of sound textures is one of the most characteristic aspects of contemporary music. Textures are particularly interesting when the polyphony is so dense that the voices become almost indistinguishable from one another. The “Texture”, at this point, becomes part of the sound timbre.

Texture is available as a VST or Audio Unit instrument for Windows and Mac OS X, and can be downloaded for free at

http://mastertas.uniroma2.it/ricerca/TexGrSy.html

The sensors used are of various types: microphones, video cameras, pressure and bending sensors, piezoelectric sensors, gyroscopes and accelerometers, and devices that make use of the electroencephalographic signal. One of the most recent examples is the EPOC neuroheadset, which provides both signals related to brain waves and high-level signals indicative of the wearer's emotions. In recent years, attention has focused on so-called Human Body Tracking Interfaces, which detect human motion and recognize gestures through sensors that can detect objects in three-dimensional space. In our system we use the Microsoft Kinect. The Kinect combines, in a single device, an RGB camera, a depth sensor based on infrared technology and a microphone array; it is therefore capable of detecting body movements, recognizing gestures and responding to voice commands.

Finally, the values recorded from all the sensors were converted into voltage signals and fed into an Arduino Mega board. The Arduino Mega is a microcontroller board based on the ATmega1280. It has 54 digital input/output pins (of which 14 can be used as PWM outputs), 16 analog inputs, 4 UARTs (hardware serial ports), a 16 MHz crystal oscillator, a USB connection, a power jack, an ICSP header, and a reset button.
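On the receiving computer, raw readings from the analog inputs would typically be rescaled before being used as control values. The sketch below assumes the board's 10-bit converter (readings from 0 to 1023) and a 5 V analog reference; the constant and function names are hypothetical, and how the readings actually reach the computer is outside this example.

```python
ADC_MAX = 1023  # 10-bit converter: readings span 0..1023
V_REF = 5.0     # assumed analog reference voltage, in volts

def adc_to_volts(reading):
    """Convert a raw analog reading to the voltage it represents."""
    return reading * V_REF / ADC_MAX

def adc_to_unit(reading):
    """Clamp a raw reading to the valid range and scale it to 0.0..1.0."""
    return min(max(reading, 0), ADC_MAX) / ADC_MAX

full_scale_volts = adc_to_volts(1023)
control_value = adc_to_unit(512)
```

Normalizing to the 0 to 1 range keeps every sensor on a common scale, which is convenient when the values are later combined or mapped onto synthesis parameters.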

Duration (minutes)

30

What is needed

Two amplified loudspeakers, an 8-channel mixer and one microphone